Running a home server is an ongoing practice. The possibilities are endless, and every new package and every new configuration comes with new challenges.

I’ve got a dozen web services running, including SearxNG, Plex, Syncthing and more.

I rely on some of them daily, while others are used rarely but are still important.

A real struggle has been remembering the damn port numbers for each and every service.

What was the port for Plex? Was it 8342? Or 3442?

I often mixed them up, and occasionally had to look up the port by ssh’ing in and running ss -ntplu | grep service just to check a port number. 🥲

On top of that, there’s the lack of TLS — definitely not ideal, even for a local network.

So recently I’ve decided to put an end to it, stop procrastinating and set things straight:

I want to be able to navigate to each service on my homeserver by simply writing: service.box.local in my browser, without having to specify the local IP or port.

We will get right to it, but first, a quick intro to TLS:

TLS (the S in HTTPS) is a security layer that encrypts data in transit between two parties. It uses asymmetric cryptography to authenticate the server and agree on a shared key, and that key then encrypts the actual traffic.

When a client connects to an HTTPS server, the server presents its TLS certificate to the client.

The client then needs to verify that the server’s certificate is valid and signed by a known and trusted Certificate Authority.

A good start

One sunny day, I started by setting up Uptime Kuma, a nice infrastructure service that served two purposes for me:

- By design, it’s an uptime tracker, and actually a good one, notifying me if any of my services is down for a while.

- And for me, it served as a dashboard, aggregating all my services in one web UI.

It was a pleasure to set up and configure, and at the end I had a full dashboard where I could find all my services!

No more ssh’ing and grep’ing to find some port number!

But it was not enough yet.

I still had to browse my server by its internal IP address like it was some basic trivial server… unacceptable. 🤮

Something had to be done..

And that something was to…

Install a ✨ Reverse Proxy ✨

The Reverse Proxy

Choosing the right proxy was a straightforward process.

I wanted it to be easy, secure, and modern.

The first association that came to mind was, intuitively, Nginx, an established major player in the category.

But it was discarded quickly from my thoughts after recalling some really bad experiences trying to configure it… Ah, dark memories.

Really, it’s a mature piece of technology, very strong and stable (once configured properly), but probably not the best fit for my use case.

The next big contender was Caddy.

Caddy has left a good impression on me since the first time that I used it a few years ago.

After reading the documentation, my memory was refreshed, and I was confident that it’s a good fit.

Caddy is modern, easy to use, secure and open source.

The emphasis on ease of use was especially appealing.

Another honorable mention is Traefik:

Traefik is a relatively new proxy, oriented toward the cloud and packed with modern features like service auto-discovery. The architecture is great and all, but it’s not exactly what I was looking for.

Caddy really ticks all my checkboxes 😌

One moment before we jump into configuring it,

I want to be able to access my server by a domain name instead of the local IP address.

For that we will have to configure the local DNS.

I will be using the name: box.local

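Inside my Unbound DNS server config I created a new rule for my domain. The redirect zone type makes Unbound answer queries for box.local and all of its subdomains from the same local data: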

local-zone: "box.local" redirect

local-data: "box.local A 10.10.10.10"

And now we can approach configuring our beloved Caddy.

I created a Caddyfile (aka Caddy’s config file) with a dozen different subdomains pointing to my various services, e.g.:

...

# Plex

plex.box.local {

reverse_proxy localhost:5000

}

# Uptime Kuma

up.box.local {

reverse_proxy localhost:3001

}

...

Looks amazing, let’s try it out!

Plot thickens

It was showtime. I was ready to see the product of my evening session.

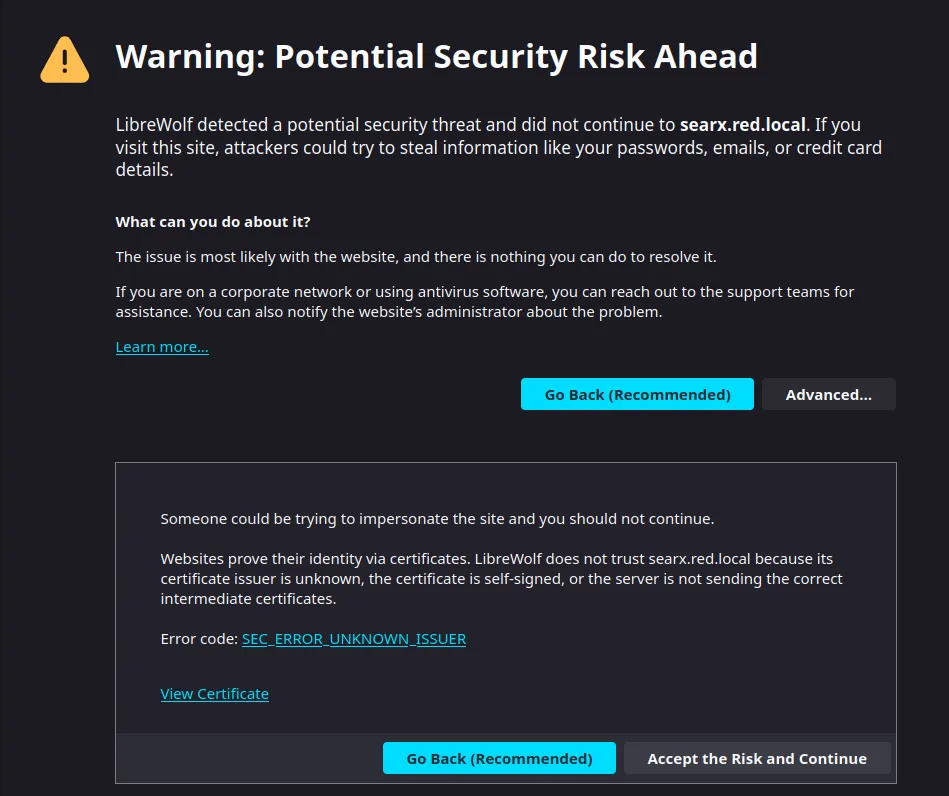

But something unexpected appeared before me. It was none other than

The Untrusted certificate browser warning!

It’s so ugly! (🤢)

And it takes me 2 whole mouse clicks to get past it!

At least it remembers my decision for as long as I keep my browser open.. 😅

OK, enough complaining, let’s get to it.

SEC_ERROR_UNKNOWN_ISSUER

That’s no surprise. I’m using a local domain name, which is only reachable within my LAN, so the ACME protocol cannot validate my domain externally and issue an actual valid TLS certificate for it.

(I could verify this by looking at caddy’s log file)

Therefore it looks like Caddy uses some internal fallback certificate to serve TLS, possibly autogenerated.

By clicking “View Certificate”, it says:

Caddy Local Authority - ECC Intermediate

That confirms my assumption: the certificate was generated automatically and signed by Caddy’s own built-in local CA, which my browser knows nothing about.

OK, hold up. This is totally unacceptable!

The right thing to do would be to create my own Certificate Authority.

Certified Certificate Certification

Earlier, the warning that I got (SEC_ERROR_UNKNOWN_ISSUER) was essentially saying that the CA that signed the cert is unknown to the browser.
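To make that error concrete, here is a minimal sketch that reproduces the same failure outside the browser with openssl verify. It builds a throwaway CA and a leaf certificate in a scratch directory, then verifies the leaf twice: once against the system trust store only, and once with the CA supplied explicitly. The file names (demoCA.*, leaf.*) and the subject names are placeholders I made up for the demo, not part of my setup.

```shell
# Scratch directory so nothing here touches real keys or certificates.
scratch="$(mktemp -d)"
cd "$scratch" || exit 1

# Throwaway CA: a key plus a self-signed certificate (placeholder names).
openssl ecparam -genkey -name secp384r1 -out demoCA.key
openssl req -x509 -new -key demoCA.key -sha384 -days 1 \
    -subj "/CN=Demo Local CA" -out demoCA.crt

# Throwaway leaf certificate signed by that CA.
openssl ecparam -genkey -name secp384r1 -out leaf.key
openssl req -new -key leaf.key -subj "/CN=demo.box.local" -out leaf.csr
openssl x509 -req -in leaf.csr -CA demoCA.crt -CAkey demoCA.key \
    -CAcreateserial -days 1 -sha384 -out leaf.crt

# 1) System trust store only: the issuer is unknown there.
if openssl verify leaf.crt >/dev/null 2>&1; then
    without_ca="trusted"
else
    without_ca="rejected"
fi

# 2) With the CA certificate supplied, the chain can be validated.
if openssl verify -CAfile demoCA.crt leaf.crt >/dev/null 2>&1; then
    with_ca="trusted"
else
    with_ca="rejected"
fi

echo "without CA: $without_ca"
echo "with CA:    $with_ca"
```

The first check is the command-line cousin of the browser warning (openssl words it as "unable to get local issuer certificate"). Importing the CA on a client is what turns the first case into the second.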

At this point we know that we need to create a domain certificate and make it trusted.

So the plan goes like this:

- Create a private key and a self-signed certificate, which together will be the CA itself.

- Create a second private key and a certificate for it, signed by the CA. This will serve as the domain certificate for my Caddy proxy, the one sent to clients when they connect via HTTPS.

- And lastly, import the CA certificate on the client machine(s) so they can validate the signature on the domain certificate.

Part 1: Creating the CA

Surprisingly, creating the keypair that will represent the actual CA is really simple, just a plain keypair with no magical features:

# For Elliptic Curve algorithms:

openssl ecparam -genkey -name secp384r1 -out localCA.key

# For RSA:

openssl genrsa -out localCA.key 4096

Notice that I’m encouraging you to use stronger keys (EC secp384r1 or RSA-4096), but you may choose differently as you see fit.
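Since this key is the root of trust for everything that follows, it seems worth checking what was actually generated and locking down its permissions. A small sketch, run in a scratch directory so it doesn’t overwrite a real localCA.key (the scratch directory and the chmod step are my additions, not part of the original steps):

```shell
scratch="$(mktemp -d)"
cd "$scratch" || exit 1

# Same EC command as above.
openssl ecparam -genkey -name secp384r1 -out localCA.key

# Only the owner should be able to read the CA's private key.
chmod 600 localCA.key

# Decode the key; keep only the size and curve lines, not the key material.
key_info="$(openssl ec -in localCA.key -noout -text 2>/dev/null)"
echo "$key_info" | grep -E 'Private-Key|ASN1 OID'
```

The ASN1 OID line should name the curve that was requested, which is a quick way to catch a typo in the -name argument.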

After creating our CA’s private key, we continue by generating the certificate for our CA:

openssl req -x509 -new -key ./localCA.key -sha384 -days 3650 -out localCA.crt

The first part of the command:

openssl req -x509 -new -key ./localCA.key

Tells openssl to create a self-signed X.509 certificate, signed with the private key that we just created.

And the second part:

-sha384 -days 3650 -out localCA.crt

Sets the signature digest (SHA-384), the validity period (3650 days, roughly ten years), and the output file.

When executing this command you will be prompted for various fields. These make up the certificate’s subject and identify your CA.

Note:

If you’re not doing this as a solo hobby project, but rather professionally and with other people involved, you really should fill in those fields or use a config file with the -config argument, so that the resulting certificate is identifiable.
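If you’d rather skip the interactive prompts, the subject can also be passed on the command line with -subj. The sketch below does that in a scratch directory and then prints the subject, issuer, and validity dates of the result. The organization and common name are placeholders I picked for illustration, so substitute your own.

```shell
scratch="$(mktemp -d)"
cd "$scratch" || exit 1

# CA private key, as in the step above.
openssl ecparam -genkey -name secp384r1 -out localCA.key

# Same certificate command, with the subject supplied non-interactively.
# "Homelab" and "Homelab Local CA" are made-up placeholder values.
openssl req -x509 -new -key ./localCA.key -sha384 -days 3650 \
    -subj "/O=Homelab/CN=Homelab Local CA" \
    -out localCA.crt

# For a self-signed CA certificate, subject and issuer are the same name.
ca_subject="$(openssl x509 -in localCA.crt -noout -subject)"
ca_issuer="$(openssl x509 -in localCA.crt -noout -issuer)"
echo "$ca_subject"
echo "$ca_issuer"
openssl x509 -in localCA.crt -noout -dates
```

Whatever you put in the common name is what shows up as the issuer when you later inspect the domain certificate in the browser, so a recognizable name pays off.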

Okay! We officially have a CA now! 🪩

Part 2: Creating our domain certificate

This is a very similar process: creating a private key and generating an X.509 certificate.

But this time the certificate will be signed by the CA’s key instead of its own; the domain’s private key only signs the request.

Additionally, we will use a CSR (Certificate Signing Request) and an ext file for defining the domain names and various other parameters.

# Step 1: Creating a private key

openssl ecparam -genkey -name secp384r1 -out box.local.key

# Step 2: Creating a CSR

openssl req -new -key box.local.key -out box.local.csr

Next we will prepare an EXT file which will contain the parameters for our future X.509 certificate.

Create a file named v3.ext (the name reflects that it contains X.509 v3 extensions):

# v3.ext

authorityKeyIdentifier=keyid,issuer

basicConstraints=CA:FALSE

keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment

extendedKeyUsage = serverAuth

subjectAltName = @alt_names

[alt_names]

DNS.1 = box.local

DNS.2 = *.box.local

Now we can finally create our domain certificate, using the CSR as input, the v3.ext file as the extensions file, and the CA keypair as the signer:

openssl x509 -req \
  -in box.local.csr \
  -CA localCA.crt -CAkey localCA.key -CAcreateserial \
  -out box.local.crt -days 365 -sha384 \
  -extfile v3.ext

This command essentially performs all the magic: it uses the Certificate Request to generate a new, fully signed certificate.

The resulting box.local.crt is the final product, our signed domain certificate :)
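Before handing the certificate to a server, it can be sanity-checked with openssl alone. The following sketch replays Part 1 and Part 2 in a scratch directory, non-interactively (the -subj values are placeholders of mine), and then checks three things: that the CSR’s self-signature is valid, that the certificate chains to the CA, and that both names from v3.ext ended up in it.

```shell
scratch="$(mktemp -d)"
cd "$scratch" || exit 1

# Part 1: CA key and self-signed CA certificate (placeholder subject).
openssl ecparam -genkey -name secp384r1 -out localCA.key
openssl req -x509 -new -key localCA.key -sha384 -days 3650 \
    -subj "/CN=Homelab Local CA" -out localCA.crt

# Part 2: domain key and CSR (placeholder subject).
openssl ecparam -genkey -name secp384r1 -out box.local.key
openssl req -new -key box.local.key -subj "/CN=box.local" -out box.local.csr

# The CSR is signed with the domain key; -verify checks that signature.
openssl req -in box.local.csr -noout -verify

# Same extensions file as above.
cat > v3.ext <<'EOF'
authorityKeyIdentifier=keyid,issuer
basicConstraints=CA:FALSE
keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment
extendedKeyUsage = serverAuth
subjectAltName = @alt_names

[alt_names]
DNS.1 = box.local
DNS.2 = *.box.local
EOF

# Sign the CSR with the CA, exactly as in the command above.
openssl x509 -req \
    -in box.local.csr \
    -CA localCA.crt -CAkey localCA.key -CAcreateserial \
    -out box.local.crt -days 365 -sha384 \
    -extfile v3.ext

# Check 1: the certificate chains to our CA.
chain_check="$(openssl verify -CAfile localCA.crt box.local.crt)"
echo "$chain_check"

# Check 2: both DNS names are present in the Subject Alternative Name.
san_names="$(openssl x509 -in box.local.crt -noout -ext subjectAltName)"
echo "$san_names"
```

The SAN check matters more than it looks: browsers match the host name against the Subject Alternative Name entries, so a missing *.box.local there would break every subdomain even though the chain itself verifies.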

Using my local domain certificate in Caddy

Back to my homelab scenario: I want the Caddy reverse proxy to use my newly created domain certificate.

I moved the certificate and its key into a directory at /etc/caddy/certs and set the Unix permissions to read-only:

-r--r----- 1 root caddy 904 Sep 8 18:58 box.local.crt

-r--r----- 1 root caddy 359 Sep 8 18:42 box.local.key

Then I added the following lines to my /etc/caddy/Caddyfile:

box.local {

tls /etc/caddy/certs/box.local.crt /etc/caddy/certs/box.local.key

}

After restarting the Caddy service, I was relieved to see that the web server now uses my domain certificate :)

I was able to confirm this by inspecting the certificate directly in the browser: it matches box.local.crt exactly.
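The same comparison can be done from a terminal by comparing SHA-256 fingerprints. This is only a sketch: the two helper function names are ones I made up, and the host name and path in the usage note are the ones from this post.

```shell
# Fingerprint of a certificate file on disk.
cert_file_fingerprint() {
    openssl x509 -in "$1" -noout -fingerprint -sha256
}

# Fingerprint of the certificate a server presents for a given host name.
# -servername sets SNI so the proxy picks the certificate for that name.
served_cert_fingerprint() {
    openssl s_client -connect "$1:443" -servername "$1" </dev/null 2>/dev/null \
        | openssl x509 -noout -fingerprint -sha256
}
```

For example, running cert_file_fingerprint /etc/caddy/certs/box.local.crt on the server and served_cert_fingerprint up.box.local from a client should print the same fingerprint if Caddy is really serving the new certificate.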

Only thing left is to import our custom CA…

Importing the CA in Arch Linux

I’m using Arch, and these are the steps I had to take to import the CA system-wide.

Note that it probably will be a different process for every major OS (including other Linux distributions).

After copying the public certificate (localCA.crt) to my machine I executed the following commands:

# Copy the cert to system ca-certificates with user=0, group=0, permissions=400

sudo install -m 400 -o root -g root ./localCA.crt /usr/local/share/ca-certificates/

# Update ca-trust

sudo update-ca-trust

sudo trust anchor /usr/local/share/ca-certificates/localCA.crt

And finally, everything works!

Now typing https://up.box.local in my browser opens the page over TLS, without any warnings, and in the TLS info I can see my certificate being used 😄

I have reached enlightenment